🔊 This post restructures the official Apache Spark 3.0.1 documentation based on my own reading and translation of it. If any of the content is wrong, feedback is always welcome! : )

This post covers Spark SQL, which lets you use SQL with Spark. As mentioned in the previous post, Spark's fundamental data structure is the RDD. It also supports a DataFrame structure similar to the DataFrame in Python's Pandas library (it even has the same name, DataFrame). We'll cover this later, but Spark can also convert a Spark DataFrame into a Pandas DataFrame.

Now let's try some simple hands-on practice with Spark SQL.

1. First things first: SparkSession!

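As mentioned in the previous post, the first thing to do is create a Spark entry-point object. Since Spark 2.0, that entry point is SparkSession. Building one creates (or reuses) the underlying SparkContext, which you can then reach through spark.sparkContext. So let's create a SparkSession, then read data stored as a JSON file and print its schema.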

```python
from pyspark.sql import SparkSession

# Create (or reuse) a SparkSession.
# 'spark.some.config.option' is the placeholder key from the official docs;
# replace it with a real config option or drop the .config() call.
spark = (
    SparkSession.builder
    .appName('Python Spark SQL basic example')
    .config('spark.some.config.option', 'some-value')
    .getOrCreate()
)

# The SparkContext created by the session
sc = spark.sparkContext

# Read the JSON file
path = '/Users/younghun/Desktop/gitrepo/TIL/pyspark/people.json'
peopleDF = spark.read.json(path)

# printSchema() shows the schema Spark inferred from the JSON file
peopleDF.printSchema()
```

For reference, printSchema() prints the DataFrame's schema as a tree: each column's name, its data type, and whether it is nullable.
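One detail worth knowing is that, by default, spark.read.json expects JSON Lines. In that format each line is a separate, self-contained JSON object, not a single pretty-printed JSON document. For multi-line JSON files there is the multiLine option.

The sketch below uses only the Python standard library to write and re-read such a file. The three records are an assumption: they mirror the sample people.json that ships with Spark, and your file may differ. The sketch also illustrates two points behind printSchema()'s output:

- A record with no age simply has no value for that field, which Spark shows as null.
- Sorting the keys matches the order in which Spark lists inferred JSON columns.

```python
import json
import os
import tempfile

# Assumed sample records, modeled on Spark's bundled people.json example.
records = [
    {"name": "Michael"},
    {"name": "Andy", "age": 30},
    {"name": "Justin", "age": 19},
]


def write_json_lines(path, rows):
    """Write one self-contained JSON object per line (JSON Lines)."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row) + "\n")


def read_json_lines(path):
    """Parse every non-empty line on its own, the layout spark.read.json expects by default."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


path = os.path.join(tempfile.mkdtemp(), "people.json")
write_json_lines(path, records)
rows = read_json_lines(path)

# Union of keys across all records, sorted by name (Spark orders inferred JSON fields this way).
columns = sorted({key for row in rows for key in row})
# A record without "age" has no value for it; Spark would show null here.
ages = [row.get("age") for row in rows]

print(columns)  # ['age', 'name']
print(ages)     # [None, 30, 19]
```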

2. Outputting data as a DataFrame with SQL

Next, we can use a database feature called a 'View', a virtual table, to build DataFrames. (If you have studied databases, views will be familiar. I have a hazy memory of learning about them in a course for my major...)

To create the virtual table, call createOrReplaceTempView("view name").

```python
# Create a temporary view (virtual table) backed by the DataFrame
peopleDF.createOrReplaceTempView("people")

# Run a query with Spark's sql() method.
# 'people' in the query is the view created above!
teenagerNamesDF = spark.sql("SELECT name FROM people WHERE age BETWEEN 13 AND 19")

teenagerNamesDF.show()
```

To display the resulting DataFrame, use the show() method.
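A view is not a Spark-specific idea. As a Spark-free illustration, here is the same query run against a temporary view in Python's built-in sqlite3 module. This is SQLite, not Spark SQL, and it uses the same three assumed sample rows. It also shows two SQL details that carry over to Spark: BETWEEN includes both endpoints, and a row whose age is NULL never satisfies the condition.

```python
import sqlite3

# In-memory SQLite database standing in for Spark (illustration only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people_raw (name TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO people_raw VALUES (?, ?)",
    [("Michael", None), ("Andy", 30), ("Justin", 19)],
)

# A temporary view is a named query over existing data; it stores no rows itself
# and is dropped when this connection closes.
conn.execute("CREATE TEMP VIEW people AS SELECT name, age FROM people_raw")

rows = conn.execute("SELECT name FROM people WHERE age BETWEEN 13 AND 19").fetchall()
teenager_names = [name for (name,) in rows]
print(teenager_names)  # ['Justin']: 19 is inside the inclusive range, NULL never matches
```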

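Among the methods that create views there is also the Global Temporary View. The ordinary temporary view used above is session-scoped: it disappears when the SparkSession that created it ends. If you want a view shared among all SparkSessions, use a global temporary view instead, which stays alive until the Spark application terminates. One thing to watch out for: a global temporary view is tied to the system database global\_temp. When you query it with SQL, you must prefix the table name with global\_temp. Don't forget!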

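```python
# Read the JSON file
path = '/Users/younghun/Desktop/gitrepo/TIL/pyspark/people.json'
df = spark.read.json(path)

# Create a Global Temporary View
df.createOrReplaceGlobalTempView('people')

# Always add the 'global_temp' prefix!
sqlDF = spark.sql('SELECT * FROM global_temp.people')
sqlDF.show()

# A global temporary view is visible from a different session as well
spark.newSession().sql('SELECT * FROM global_temp.people').show()
```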
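To make the scoping rule concrete, here is a deliberately tiny toy model in plain Python. The class and method names are hypothetical, and this is not how Spark's catalog is implemented. It only mirrors the behavior described above:

- Temporary views belong to one session.
- Global temporary views are shared across the sessions of one application.
- Global temporary views are reached only through the global_temp prefix.

```python
class ToySparkApp:
    """Hypothetical toy model of view scoping (not Spark's real catalog)."""

    def __init__(self):
        self.global_views = {}  # shared by every session of this application

    def new_session(self):
        return ToySession(self)


class ToySession:
    GLOBAL_DB = "global_temp"

    def __init__(self, app):
        self.app = app
        self.temp_views = {}  # visible only inside this session

    def create_or_replace_temp_view(self, name, rows):
        self.temp_views[name] = rows

    def create_or_replace_global_temp_view(self, name, rows):
        self.app.global_views[name] = rows

    def lookup(self, table_name):
        # Global temp views live under the reserved database name, so only
        # "global_temp.<name>" resolves to them; a bare name means a session view.
        prefix = self.GLOBAL_DB + "."
        if table_name.startswith(prefix):
            return self.app.global_views[table_name[len(prefix):]]
        return self.temp_views[table_name]


app = ToySparkApp()
first = app.new_session()
first.create_or_replace_temp_view("people", ["session-only rows"])
first.create_or_replace_global_temp_view("people", ["shared rows"])

second = app.new_session()
print(second.lookup("global_temp.people"))  # ['shared rows']
try:
    second.lookup("people")
except KeyError:
    print("'people' temp view is not visible from the second session")
```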

As a side note, you can also turn JSON-formatted data into an RDD and read that RDD as a DataFrame.

```python
# A DataFrame can also be created from a JSON dataset held in an RDD[String]
# (one JSON object per string)
jsonStrings = ['{"name": "Yin", "address":{"city":"Columbus", "state":"Ohio"}}']

# JSON strings -> RDD
otherPeopleRDD = sc.parallelize(jsonStrings)

# Read the RDD of JSON strings as a DataFrame
otherPeople = spark.read.json(otherPeopleRDD)
otherPeople.show()
```
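The JSON string above contains a nested object, and Spark infers it as a struct column. The sketch below is a simplified, hypothetical plain-Python imitation of the tree layout that printSchema() uses. Its type mapping only covers the value types in this example, and it is not Spark's inference logic. It should still give a feel for what otherPeople.printSchema() reports: address becomes a struct with city and state fields, and fields are listed by name.

```python
import json

json_strings = ['{"name": "Yin", "address":{"city":"Columbus", "state":"Ohio"}}']


def type_name(value):
    # Simplified mapping for this example only (Spark's real inference is richer).
    if isinstance(value, dict):
        return "struct"
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "double"
    return "string"


def schema_lines(obj, depth=1):
    """Render nested dict keys as a printSchema()-style tree, sorted by field name."""
    lines = []
    for key in sorted(obj):
        value = obj[key]
        prefix = " |" + "    |" * (depth - 1) + "-- "
        lines.append(f"{prefix}{key}: {type_name(value)} (nullable = true)")
        if isinstance(value, dict):
            lines.extend(schema_lines(value, depth + 1))
    return lines


record = json.loads(json_strings[0])
tree = "\n".join(["root"] + schema_lines(record))
print(tree)
```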

3. Accessing the columns of a Spark DataFrame!

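These operations are called DataFrame Operations, or Untyped Dataset Operations. Inside Spark, the difference between a Dataset and a DataFrame comes down to typed versus untyped:

- A typed Dataset (Dataset[T] in Scala/Java) binds each row to a type T defined in advance.
- A DataFrame is an untyped Dataset[Row]. Its rows are generic Row objects, and column names and types are checked against the schema only at runtime.

This also changes when errors are caught. A typed Dataset can catch a wrong field or type at compile time, whereas with a DataFrame the same mistake surfaces only when the query is analyzed at runtime.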

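Note that the code in this post uses DataFrames, not Datasets (the Dataset API is available only in Scala and Java). There are two main ways to access a DataFrame column: df.column and df\['column'\]. The official docs recommend the latter. Attribute access is convenient for interactive exploration, but indexing is future-proof and doesn't break when a column name is also an attribute of the DataFrame class. (This, too, resembles Pandas!)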

```python
# Read the JSON file
path = '/Users/younghun/Desktop/gitrepo/TIL/pyspark/people.json'
df = spark.read.json(path)

# Select the name column and inspect it
df.select('name').show()
```
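Why can attribute access break? In PySpark, df.age works because DataFrame falls back to column lookup only when normal attribute lookup fails. As a result, a column whose name matches a DataFrame method (count, for example) is shadowed by that method. The toy class below is hypothetical, not PySpark's DataFrame, and reproduces just that lookup order in plain Python.

```python
class ToyFrame:
    """Hypothetical stand-in for a DataFrame; only models attribute vs. index lookup."""

    def __init__(self, columns):
        self.columns = columns

    def __getattr__(self, name):
        # Python calls __getattr__ only after normal attribute lookup has failed.
        if name in self.columns:
            return f"Column<{name}>"
        raise AttributeError(name)

    def __getitem__(self, name):
        # Indexing always goes to the column namespace.
        if name in self.columns:
            return f"Column<{name}>"
        raise KeyError(name)

    def count(self):
        """A method whose name collides with a column called 'count'."""
        return 3


df = ToyFrame(["name", "count"])
print(df.name)             # Column<name>  (falls through to __getattr__)
print(df["count"])         # Column<count> (indexing is unambiguous)
print(callable(df.count))  # True: the method shadows the column
```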

You can also select two or more columns at once and apply operations to existing columns to derive new ones.

```python
# Read the JSON file
path = '/Users/younghun/Desktop/gitrepo/TIL/pyspark/people.json'
df = spark.read.json(path)

# Select name plus a derived column, age + 1
df.select(df['name'], df['age'] + 1).show()
```
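Note how a derived column treats missing values. In Spark SQL, arithmetic on null yields null, so a person without an age gets null in the age + 1 column rather than an error. The plain-Python sketch below mimics that row by row on the same three assumed sample records. It illustrates the semantics and is not Spark code. In Spark you could also give the derived column a readable name with alias(), e.g. (df['age'] + 1).alias('age_plus_one').

```python
# Assumed sample rows (name, age); None plays the role of Spark's null.
people = [("Michael", None), ("Andy", 30), ("Justin", 19)]


def plus_one(value):
    """Null-propagating addition: null + 1 is null, as in Spark SQL."""
    return None if value is None else value + 1


derived = [(name, plus_one(age)) for name, age in people]
print(derived)  # [('Michael', None), ('Andy', 31), ('Justin', 20)]
```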

Next is example code for filter(), which keeps only the rows that satisfy a condition, and groupBy(), which groups rows by a given column.
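Here is what these two calls compute, sketched in plain Python on the same assumed sample rows. It illustrates the semantics and is not Spark code. filter(df['age'] > 20) drops rows whose age is null, because comparing null with 20 is not true. groupBy('age'), on the other hand, keeps null as a group of its own.

```python
from collections import Counter

# Assumed sample rows; None stands in for Spark's null.
people = [
    {"name": "Michael", "age": None},
    {"name": "Andy", "age": 30},
    {"name": "Justin", "age": 19},
]

# filter(age > 20): a null age never passes the comparison.
older_than_20 = [p for p in people if p["age"] is not None and p["age"] > 20]

# groupBy("age").count(): one count per distinct age, null included.
age_counts = Counter(p["age"] for p in people)

print([p["name"] for p in older_than_20])  # ['Andy']
print(dict(age_counts))                    # {None: 1, 30: 1, 19: 1}
```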

```python
# Read the JSON file
path = '/Users/younghun/Desktop/gitrepo/TIL/pyspark/people.json'
df = spark.read.json(path)

# Keep only rows whose age is greater than 20
df.filter(df['age'] > 20).show()

# Group by the age column and count the rows in each group
df.groupBy('age').count().show()
```

4. Specifying a DataFrame's schema programmatically!

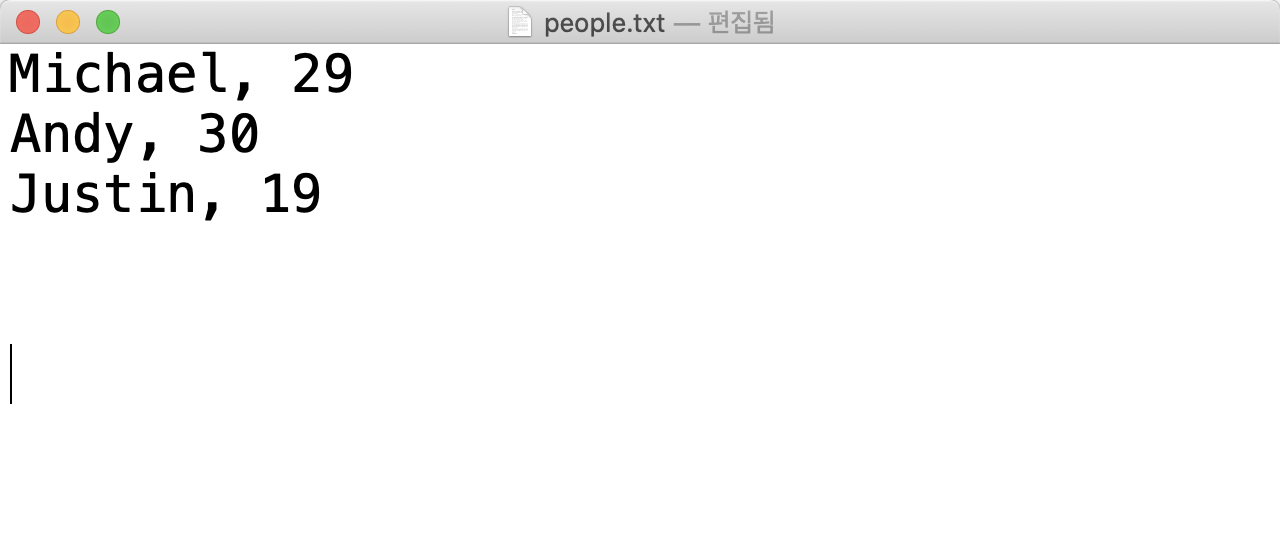

로드할 데이터 csv, txt, excel 등 여러가지 종류의 파일에 스키마 즉, 칼럼명(헤더)가 기입되어 있는 경우도 있지만 그렇지 않고 스키마만 따로 다른 파일에 담겨있거나 프로그래머가 직접 명시해주어야 하는 경우도 있다. 이러한 경우를 대비해 데이터 파일 외부에 명시되어 있는 스키마를 가져와 데이터프레임의 헤더에 삽입해주는 코드를 짜보자. 우선 데이터파일은 다음과 같다.

Now let's load the data from the txt file, define the schema as a space-separated string, and apply it to the DataFrame.

```python
from pyspark.sql.types import StructField, StructType, StringType

# The SparkContext behind the session
sc = spark.sparkContext

# Read the txt file and split each line on commas
lines = sc.textFile('./people.txt')
parts = lines.map(lambda l: l.split(','))

## Step 1 ## => handle the values
# Convert each line into a (name, age) tuple,
# stripping the space left after the comma from age
people = parts.map(lambda p: (p[0], p[1].strip()))

## Step 2 ## => handle the schema
# Schema encoded as a space-separated string
schemaString = "name age"

# Loop over the names in schemaString and make a nullable StringType StructField for each
fields = [StructField(field_name, StringType(), True) for field_name in schemaString.split()]

# Wrap the list of StructFields in a StructType
schema = StructType(fields)

## Step 3 ## => build the DataFrame from the values and the schema
# Apply the schema to the RDD
schemaPeople = spark.createDataFrame(people, schema)

# Create a view and query it to pull the data
schemaPeople.createOrReplaceTempView('people')
results = spark.sql("SELECT * FROM people")
results.show()
```
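To see what Steps 1 and 2 produce without a cluster, here is the same parsing done on a plain Python list. The three lines are assumed to match the people.txt sample bundled with Spark ("Michael, 29" and so on). Because every field was declared as StringType, age stays a string such as '29'. If you want numbers, declare that field as IntegerType and convert the value with int() in Step 1.

```python
# Assumed contents of people.txt (modeled on Spark's bundled example file).
lines = ["Michael, 29", "Andy, 30", "Justin, 19"]

# Step 1: split on commas and strip the space that follows each comma.
parts = [line.split(",") for line in lines]
people = [(p[0], p[1].strip()) for p in parts]

# Step 2: the schema string becomes the list of column names.
schema_string = "name age"
field_names = schema_string.split()

# Pair each tuple with the field names, roughly what each Row holds after Step 3.
rows = [dict(zip(field_names, values)) for values in people]
print(rows)
# [{'name': 'Michael', 'age': '29'}, {'name': 'Andy', 'age': '30'}, {'name': 'Justin', 'age': '19'}]
```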
